Table of Contents

- Why Analytics Matters So Much for Product Managers

- Metrics, KPIs, and North Star Metrics: Getting the Basics Straight

- What Product Managers Should Actually Track

- Using Frameworks: AARRR, Funnels, and the North Star

- How to Go From Data to Decisions

- Common Analytics Pitfalls (And How to Avoid Them)

- Collaborating Across Teams Using Analytics

- Real-World Experiences: Making Analytics Work Day-to-Day

- Conclusion

Being a product manager today means living in a world where dashboards multiply faster than your backlog tickets.

There’s a graph for everything: daily active users, weekly active users, users who thought about becoming active but

then went for coffee instead… It’s easy to drown in data and still have no idea what to do on Monday morning.

The real skill isn’t “knowing analytics tools.” It’s knowing what to track and, even more importantly,

how to act on what you see. When you get analytics right, you can align your team, ship the right things

faster, and stop arguing about whose “gut feeling” is better.

In this guide, we’ll walk through the essentials of analytics for product managers: the core metrics to track,

how to structure them using proven frameworks, and a practical approach to turning insights into action.

We’ll also dig into real-world lessons so this doesn’t stay in “strategy slide” territory.

Why Analytics Matters So Much for Product Managers

Product analytics is simply understanding how people actually use your product, not how you hope they use it.

It shows which features create value, where users struggle, and how changes impact behavior over time.

For product managers, analytics helps you:

- Validate decisions instead of relying purely on opinions and assumptions.

- Spot opportunities for growth, adoption, and monetization earlier.

- Prioritize work based on measurable impact, not who escalates loudest on Slack.

- Align teams around shared goals and numbers everyone can see.

The challenge? You can’t track everything. If you try, you’ll end up with what many teams already have:

40+ charts, no clear story, and a PM quietly wondering why nothing feels actionable.

Metrics, KPIs, and North Star Metrics: Getting the Basics Straight

Metrics vs KPIs

A metric is any measurable value: number of signups, sessions per user, feature clicks, etc.

A Key Performance Indicator (KPI) is a metric you’ve promoted to “this actually matters.”

KPIs are tied directly to goals and success criteria; metrics are simply the raw ingredients.

Example:

- Metric: Number of emails sent by users this week.

- KPI: Percentage of new users who send at least one email in their first 7 days.

Both are useful, but the KPI tells you whether your onboarding and early experience are actually working.
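
To make the difference concrete, here is a minimal Python sketch that computes both numbers from the same event log. The user IDs, dates, and the `first_week_email_rate` helper are invented for illustration; your own event schema will look different.

```python
from datetime import datetime, timedelta

# Hypothetical data: signup time per user, plus a log of (user_id, sent_at) email events.
signups = {
    "u1": datetime(2024, 1, 1),
    "u2": datetime(2024, 1, 2),
    "u3": datetime(2024, 1, 3),
    "u4": datetime(2024, 1, 3),
}
emails_sent = [
    ("u1", datetime(2024, 1, 2)),
    ("u1", datetime(2024, 1, 5)),
    ("u2", datetime(2024, 1, 20)),  # outside u2's first 7 days
    ("u4", datetime(2024, 1, 4)),
]


def first_week_email_rate(signups, emails_sent, window_days=7):
    """Share of signed-up users who sent at least one email within the window."""
    window = timedelta(days=window_days)
    qualified = {
        user
        for user, sent_at in emails_sent
        if user in signups and timedelta(0) <= sent_at - signups[user] < window
    }
    return len(qualified) / len(signups) if signups else 0.0


metric_emails_sent = len(emails_sent)                   # raw metric: total emails
kpi_rate = first_week_email_rate(signups, emails_sent)  # KPI: share of new users
print(metric_emails_sent, kpi_rate)
```

The raw count goes up whenever one power user sends more email; the KPI only moves when more new users reach the behavior you care about.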

What Is a North Star Metric?

A North Star Metric (NSM) is the single metric that best captures the value your product delivers

to customers and aligns with long-term business outcomes.

Good North Star Metrics:

- Are directly tied to customer value (not just revenue).

- Are leading indicators of success, not lagging vanity numbers.

- Are understandable to everyone across the company.

Classic examples include:

- Spotify: Time spent listening.

- Airbnb: Nights booked.

- Collaboration tools: Number of active teams completing X meaningful actions per week.

Your NSM becomes the anchor for all other metrics. Think of it as your headline, with other metrics acting as

supporting paragraphs that explain “why” and “how.”

What Product Managers Should Actually Track

There are hundreds of possible metrics, but most PMs can start with a focused set grouped into a few key areas:

acquisition, activation, engagement, retention, satisfaction, and business impact.

1. Acquisition: How People Discover and Sign Up

Acquisition metrics tell you whether you’re successfully attracting the right users.

- New signups / new accounts: How many people are starting their journey?

- Traffic by source: Organic, paid, referrals, partnerships, etc.

- Customer Acquisition Cost (CAC): How much you spend to acquire a paying customer.

If acquisition is healthy but everything else looks bad, you don’t have a marketing problem; you have a

product experience problem after signup.

2. Activation: The First “Aha!” Moment

Activation is when a user experiences clear value for the first time. Your activation metrics

should reflect that “aha” moment.

- Activation rate: Percentage of new users who complete the key value-driving action.

- Time to value (TTV): How long it takes for a user to reach that action.

- Onboarding completion: Percentage of users who finish your onboarding flow.

For a project management tool, activation might be “creates a project and invites at least one collaborator.”

For an analytics platform, it might be “installs tracking and views the first live dashboard.”
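
As a rough sketch, activation rate and time to value can be computed from two timestamps per user. The records below are hypothetical, and using the median for TTV is an assumption on my part (it is less sensitive to a few very slow users than the mean).

```python
from datetime import datetime
from statistics import median

# Hypothetical records: when each user signed up and when (if ever) they
# completed the key value-driving action.
users = [
    {"signup": datetime(2024, 3, 1, 9, 0), "activated": datetime(2024, 3, 1, 10, 0)},
    {"signup": datetime(2024, 3, 1, 9, 0), "activated": datetime(2024, 3, 2, 9, 0)},
    {"signup": datetime(2024, 3, 2, 9, 0), "activated": None},
    {"signup": datetime(2024, 3, 2, 9, 0), "activated": datetime(2024, 3, 2, 12, 0)},
]

activated = [u for u in users if u["activated"] is not None]

# Activation rate: activated users divided by all new users.
activation_rate = len(activated) / len(users)

# Time to value in hours, for activated users only.
ttv_hours = [
    (u["activated"] - u["signup"]).total_seconds() / 3600 for u in activated
]
median_ttv_hours = median(ttv_hours)

print(activation_rate, median_ttv_hours)
```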

3. Engagement & Adoption: Are Users Really Using Your Product?

Engagement metrics tell you whether people are building habits around your product.

- DAU / MAU (Daily / Monthly Active Users): How many users are actively using the product.

- Stickiness: DAU divided by MAU, which approximates the share of monthly users who are active on a given day.

- Feature adoption rate: Percentage of active users using a specific feature.

Feature adoption is especially useful to PMs: it can show whether your shiny new feature is quietly thriving,

confusing everyone, or sitting like digital furniture no one touches.
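
Here is one way to sketch stickiness and feature adoption in Python. The 30-day window, the user activity, and the `feature_users` set are all hypothetical, and averaging DAU across the window is just one common convention; some teams use a single day’s DAU instead.

```python
from datetime import date, timedelta

# Hypothetical 30-day window and the days on which each user was active.
start = date(2024, 4, 1)
days = [start + timedelta(days=i) for i in range(30)]
active_days = {
    "u1": set(days[:15]),    # active on 15 days
    "u2": set(days[:3]),     # active on 3 days
    "u3": set(days[10:22]),  # active on 12 days
}


def stickiness(active_days, days):
    """Average DAU over the window divided by MAU for the same window."""
    window = set(days)
    dau_per_day = [
        sum(1 for user_days in active_days.values() if day in user_days)
        for day in days
    ]
    avg_dau = sum(dau_per_day) / len(days)
    mau = sum(1 for user_days in active_days.values() if user_days & window)
    return avg_dau / mau if mau else 0.0


# Feature adoption: share of active users who used a given feature.
feature_users = {"u1"}  # hypothetical: only u1 used the new feature
monthly_active = {user for user, d in active_days.items() if d & set(days)}
feature_adoption = len(feature_users & monthly_active) / len(monthly_active)

print(round(stickiness(active_days, days), 3), round(feature_adoption, 3))
```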

4. Retention & Churn: Do Users Come Back?

If activation is about first value, retention is about sustained value.

- Retention rate: Percentage of users who remain active over a given period (e.g., 30, 60, 90 days).

- Churn rate: Percentage of users or customers who stop using or cancel in a period.

- Cohort retention: How different signup cohorts retain over time.

Cohort analysis is powerful. If retention improved for users who joined after a new onboarding redesign,

you have real evidence that your work mattered.
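
A cohort table is easy to sketch by hand before you reach for a tool. In the example below, the users, the month strings, and the `last_observed` cutoff are all made up; periods that haven’t happened yet are reported as `None` rather than as 0% retention.

```python
from collections import defaultdict


def month_index(ym):
    """Turn 'YYYY-MM' into a running month number so months can be subtracted."""
    year, month = map(int, ym.split("-"))
    return year * 12 + month


# Hypothetical users: signup month and the months in which they were active.
users = [
    {"id": "u1", "signup": "2024-01", "active": {"2024-01", "2024-02", "2024-03"}},
    {"id": "u2", "signup": "2024-01", "active": {"2024-01"}},
    {"id": "u3", "signup": "2024-02", "active": {"2024-02", "2024-03"}},
    {"id": "u4", "signup": "2024-02", "active": {"2024-02", "2024-03"}},
]


def cohort_retention(users, last_observed, max_offset=2):
    """Map each signup cohort to its retention rate at month 0, 1, 2, ..."""
    cohorts = defaultdict(list)
    for user in users:
        cohorts[user["signup"]].append(user)

    table = {}
    for cohort, members in sorted(cohorts.items()):
        base = month_index(cohort)
        row = []
        for offset in range(max_offset + 1):
            if base + offset > month_index(last_observed):
                row.append(None)  # this period has not been observed yet
                continue
            retained = sum(
                1
                for user in members
                if any(month_index(m) - base == offset for m in user["active"])
            )
            row.append(retained / len(members))
        table[cohort] = row
    return table


retention = cohort_retention(users, last_observed="2024-03")
print(retention)
```

Reading across a row shows how one cohort decays over time; reading down a column compares cohorts at the same age, which is where the effect of something like an onboarding redesign would show up.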

5. Customer Satisfaction & Experience

Happiness is harder to measure than clicks, but it’s just as critical. Common PM satisfaction metrics include:

- Net Promoter Score (NPS): How likely users are to recommend your product to others.

- Customer Satisfaction (CSAT): Post-interaction or feature-specific satisfaction ratings.

- Customer Effort Score (CES): How easy users find a task or flow.

Combine these quantitative signals with qualitative feedback (support tickets, user interviews, open-ended survey responses)

to understand the “why” behind the scores.
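
NPS in particular is often misread as an average, so a quick sketch helps. It uses the standard convention for a 0 to 10 survey: scores of 9 and 10 count as promoters, 0 through 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. The survey responses below are made up.

```python
def net_promoter_score(scores):
    """NPS on a 0-10 scale: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("need at least one survey response")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100 * (promoters - detractors) / len(scores)


# Hypothetical survey responses.
responses = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
nps = net_promoter_score(responses)
print(nps)
```

Note that the 7s and 8s (passives) still count in the denominator, which is why NPS can sit near zero even when most users are reasonably happy.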

6. Revenue & Unit Economics

At some point, someone will ask, “So how is this affecting revenue?” Good product analytics doesn’t ignore the money.

- Monthly Recurring Revenue (MRR) / Annual Recurring Revenue (ARR).

- Average Revenue Per User (ARPU) or per account.

- Customer Lifetime Value (CLV).

- Churned revenue vs. expansion revenue.

These help you answer whether you’re building a product that is not just loved, but also sustainable and scalable.
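
For a back-of-the-envelope view, the sketch below derives ARPU, net new MRR, and a simplified CLV from a handful of made-up numbers. The CLV formula here (ARPU divided by monthly churn rate) is a common simplification and an assumption on my part; it ignores gross margin, discounting, and expansion, so treat it as a rough guide rather than a finance-grade figure.

```python
# Hypothetical monthly figures for a subscription product.
mrr = 50_000.0            # monthly recurring revenue
paying_accounts = 250
monthly_churn_rate = 0.04  # share of paying accounts lost per month
expansion_mrr = 3_000.0    # upgrades and added seats this month
churned_mrr = 2_000.0      # revenue lost to cancellations and downgrades

# Average revenue per account.
arpu = mrr / paying_accounts

# Simplified CLV: average monthly revenue times expected lifetime in months,
# where expected lifetime is approximated as 1 / churn rate.
simple_clv = arpu / monthly_churn_rate

# Churned revenue vs. expansion revenue, netted out.
net_new_mrr_from_existing = expansion_mrr - churned_mrr

print(arpu, round(simple_clv, 2), net_new_mrr_from_existing)
```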

7. Task Success & UX Quality

Finally, don’t forget task-level metrics that show whether users can actually do the things your product promises.

These include task completion rate, time to complete a task, and error rate.

For example, in a checkout flow:

- What percentage of users successfully complete checkout?

- Where do they drop off?

- How long does it take from cart to confirmation?

These metrics are gold when collaborating with UX and engineering on usability improvements.

Using Frameworks: AARRR, Funnels, and the North Star

AARRR (Pirate Metrics) for the Full User Journey

The AARRR framework (yes, say it like a pirate) stands for Acquisition, Activation, Retention,

Referral, and Revenue. It helps you structure your analytics along the entire customer lifecycle.

For each stage, define:

- A clear goal.

- One or two primary metrics.

- Supporting diagnostic metrics.

Example for a SaaS collaboration tool:

- Acquisition: Signups from organic search.

- Activation: Teams that create their first project and invite a teammate.

- Retention: Teams active in at least one project every week.

- Referral: Invites sent per active team.

- Revenue: MRR from active paying teams.

Funnels & Cohorts for Diagnostics

Funnels show how users move through a sequence of steps (for example, landing page → signup → onboarding step 1 →

onboarding step 2 → first key action). They’re perfect for spotting where you lose people.

Cohorts group users by a shared attribute (signup month, acquisition channel, pricing plan) and show behavior over time,

such as retention or expansion. Together, funnels and cohorts turn vague problems (“onboarding is weak”) into precise ones

(“users from paid search drop 40% at step 3”).
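
The funnel half of that is simple enough to sketch in a few lines of Python. The step names follow the example above, while the counts and the `step_drop_offs` helper are hypothetical.

```python
# Hypothetical funnel: (step name, number of users who reached it).
funnel = [
    ("landing page", 1000),
    ("signup", 600),
    ("onboarding step 1", 540),
    ("onboarding step 2", 270),
    ("first key action", 230),
]


def step_drop_offs(funnel):
    """Return (from_step, to_step, drop_off_rate) for each adjacent pair of steps."""
    results = []
    for (prev_name, prev_count), (name, count) in zip(funnel, funnel[1:]):
        drop = 1 - count / prev_count if prev_count else 0.0
        results.append((prev_name, name, drop))
    return results


drop_offs = step_drop_offs(funnel)
worst = max(drop_offs, key=lambda row: row[2])     # biggest leak in the funnel
overall_conversion = funnel[-1][1] / funnel[0][1]  # end-to-end conversion

for prev_name, name, drop in drop_offs:
    print(f"{prev_name} -> {name}: {drop:.0%} drop-off")
```

Running the same function separately per cohort (for example, per acquisition channel) is how you get from “onboarding is weak” to a specific segment and step.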

North Star Metric & Input Metrics

Your North Star Metric is the big “what,” but you still need the “how.” That’s where input metrics come in.

Input metrics are the controllable drivers that influence your North Star. For example:

- NSM: Weekly active teams collaborating on projects.

- Input metrics:

  - Number of new projects created per week.

  - Average teammates invited per new project.

  - Percentage of teams using core collaboration features (comments, file sharing, etc.).

Product teams can own and experiment on input metrics while keeping the North Star as the ultimate scoreboard.

How to Go From Data to Decisions

Tracking metrics is the easy part. Acting on them is where things get interesting. A simple cycle you can use:

- Start with a clear objective. “Improve new-user activation by 10% in Q1.”

- Define the key metrics. Activation rate, time to value, onboarding completion.

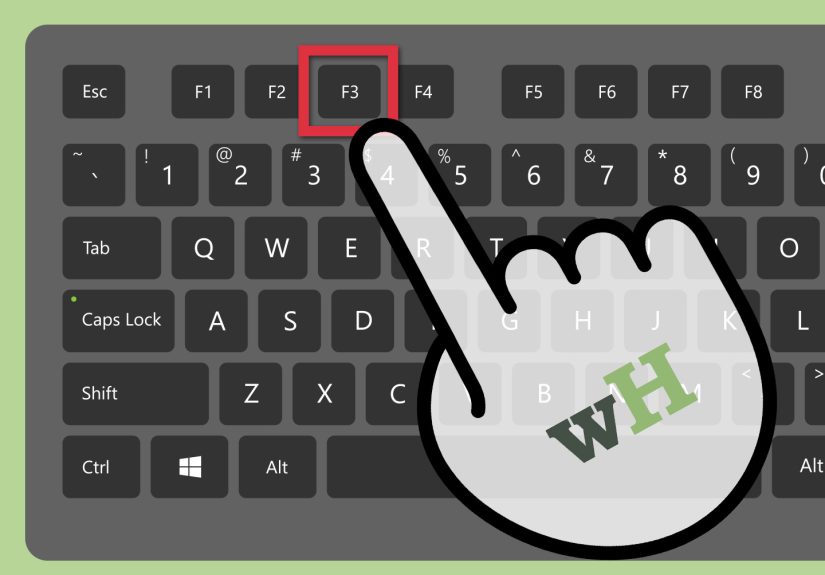

- Instrument and validate your data. Make sure events and properties are correct and trustworthy.

- Identify the biggest friction points. Use funnels, session data, and qualitative feedback.

- Generate hypotheses. “If we simplify step 3 and add in-product guidance, activation will increase.”

- Run experiments. A/B tests where possible; phased rollouts when not.

- Measure impact. Compare against baseline and cohorts.

- Decide and iterate. Double down, refine, or scrap based on the data.

Best-practice guides consistently emphasize focusing on actionable insights, balancing quantitative data

with qualitative research, and using analytics in a continuous feedback loop rather than a one-off audit.

A Quick Example: Fixing Low Activation

Imagine you own onboarding for a B2B SaaS product and notice:

- Signups are growing steadily.

- Activation rate is stuck at 30%.

- Retention beyond 30 days is poor for unactivated users (no surprise).

You dig into your funnel and discover a steep drop-off on step 3 of onboarding, where users must configure multiple

settings before they see any value.

You run experiments:

- Skip non-essential settings and let users start with sensible defaults.

- Add a “guided setup” checklist with progress indicators.

- Trigger a contextual tooltip highlighting the first key action.

Post-experiment, activation climbs from 30% to 43%, and 30-day retention follows the same upward trend.

Now you’re not just “looking at data”; you’re using it as a steering wheel.
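
Before celebrating a jump like 30% to 43%, it is worth checking that it isn’t noise. The sketch below runs a two-proportion z-test using only the standard library. The sample sizes (1,000 users per group) are an assumption added for illustration, since the example above doesn’t state them, and a real experiment platform may use a different test.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return z, p_value


# Assumed sample sizes; only the 30% and 43% rates come from the example.
control_activated, control_users = 300, 1000
variant_activated, variant_users = 430, 1000

control_rate = control_activated / control_users
variant_rate = variant_activated / variant_users
absolute_lift = variant_rate - control_rate   # in percentage points (as a fraction)
relative_lift = absolute_lift / control_rate  # relative to the baseline

z, p_value = two_proportion_z_test(
    control_activated, control_users, variant_activated, variant_users
)
print(f"absolute lift: {absolute_lift:.1%}, relative lift: {relative_lift:.1%}")
print(f"z = {z:.2f}, p = {p_value:.2g}")
```

With smaller groups the same 13-point gap could easily fail to reach significance, which is why sample size belongs in the experiment plan, not the retrospective.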

Common Analytics Pitfalls (And How to Avoid Them)

- Tracking everything, prioritizing nothing: Limit yourself to a focused set of KPIs and supporting metrics. Everything else can live in a “debug dashboard.”

- Vanity metrics obsession: Pageviews or raw signups feel good but don’t always map to value. Tie metrics to activation, retention, or revenue whenever possible.

- Ignoring qualitative insights: Numbers tell you what is happening; users tell you why.

- Analysis paralysis: If your analytics doesn’t lead to a decision or experiment, you’re probably going too deep for the question at hand.

- Data silos: Work with data, engineering, and marketing to build shared definitions and single sources of truth.

Collaborating Across Teams Using Analytics

Analytics is a team sport. The best product managers don’t “own the numbers” in isolation; they use them as a shared language.

Some practical ways to collaborate:

- With engineering: Align on tracking requirements early in the spec. Make instrumentation part of “done,” not a nice-to-have.

- With design: Use task success metrics and user behavior patterns to prioritize UX improvements.

- With marketing: Connect acquisition efforts to downstream metrics like activation, retention, and revenue.

- With leadership: Report progress using the North Star and a handful of key KPIs, not a dump of every chart you have.

Over time, you want analytics to become less “the PM’s thing” and more “how we all decide what to build and improve.”

Real-World Experiences: Making Analytics Work Day-to-Day

Theory is nice, but the reality of analytics for product managers is often messier: incomplete data,

shifting goals, and dashboards that mysteriously break right before your big review meeting.

Here are some experience-based lessons that tend to hold up across products and teams.

Start Smaller Than You Think

Many PMs kick off an analytics initiative by trying to track everything at once. The intent is good

(“we need visibility”), but the result is usually chaos: half-instrumented events, confused stakeholders,

and a backlog full of “please fix the numbers” tickets.

A more practical approach is to start with one key objective and 3–5 supporting metrics.

For example, if your focus this quarter is activation, ignore deeper engagement and revenue metrics for a moment.

Measure only what helps you understand and improve those first steps. Once that flow is reliable and improved,

then you can expand.

Make Definitions Painfully Explicit

Nothing derails a meeting faster than discovering that different teams mean different things by “active user.”

One of the highest-impact, low-glamour tasks you can do as a PM is to drive alignment on metric definitions:

- What exactly counts as an “active user” (time window, action types)?

- What qualifies as “churned”: no login for 30 days, 60 days, or a cancelled subscription?

- How is MRR calculated: net of discounts, including trials, by account or by seat?

Publish definitions in a simple, shared document and link to it in dashboards.

This reduces arguments and makes your analytics instantly more trustworthy.
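
One way to keep a definition honest is to write it down as code as well as prose. The sketch below is purely illustrative: the `ActiveUserDefinition` class, the action names, and the dates are all hypothetical. It shows how the same user can be “active” under one team’s definition and inactive under another’s.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class ActiveUserDefinition:
    """An explicit, shareable definition of 'active user'."""
    window_days: int
    qualifying_actions: frozenset

    def is_active(self, events, as_of):
        """True if any qualifying action falls within the window ending at as_of."""
        window_start = as_of - timedelta(days=self.window_days)
        return any(
            action in self.qualifying_actions and window_start < day <= as_of
            for action, day in events
        )


# Two hypothetical definitions that different teams might be using.
strict = ActiveUserDefinition(30, frozenset({"create_project", "comment"}))
loose = ActiveUserDefinition(30, frozenset({"login", "create_project", "comment"}))

# Hypothetical event history for one user: (action, day).
events = [("login", date(2024, 5, 20)), ("comment", date(2024, 4, 1))]
as_of = date(2024, 5, 31)

print(strict.is_active(events, as_of), loose.is_active(events, as_of))
```

Neither definition is wrong, but a dashboard has to pick one, and everyone reading it should know which.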

Use Analytics to Ask Better Questions, Not Just Answer Them

Experienced PMs don’t open dashboards hoping for a magical answer. Instead, they arrive with a question:

“Why did retention drop for small teams?”, “What changed for users acquired via this new channel?”,

“Which parts of onboarding are most predictive of long-term use?”

When you treat analytics as a tool for structured curiosity, you avoid the trap of staring at graphs until

you see shapes that confirm your existing opinions. Data should challenge you at least as often as it reassures you.

Pair Quantitative Data With Real User Stories

One of the most practical habits is pairing every major metric review with at least a handful of concrete user stories:

- A recent support ticket that reflects a common pattern.

- A highlight from a usability test or interview.

- An anonymized user journey showing clicks, errors, and session length.

This combination keeps your team grounded. “Activation dropped 8%” sounds abstract;

“Users hit a confusing permissions screen and bounce at step 2” feels solvable.

Celebrate Learning, Not Just Winning Experiments

In real life, many experiments don’t move metrics in the direction you expected.

That’s not failure; it’s tuition. The most effective PMs create a culture where

a carefully run experiment that proves a hypothesis wrong is still considered a win because it sharpens the team’s understanding.

For example, say you test a shorter signup form because you’re convinced it will boost conversions.

Instead, conversion rates don’t budge, but you realize that users are dropping after signup

due to a confusing first-run experience. Now you know where to focus next.

Turn Dashboards Into Rituals

Dashboards have the most impact when they’re part of your regular rhythms, not something you open

only when something is on fire. Many teams benefit from:

- Weekly metric reviews tied to specific goals (e.g., “activation hour” every Tuesday).

- Monthly deep dives into one critical area (retention, acquisition quality, feature adoption).

- Quarterly retrospectives that connect product bets to actual metric changes.

Over time, this turns analytics from a “thing we check sometimes” into a core part of how the team runs.

Accept That the Data Will Never Be Perfect

Finally, a realistic reminder: your data will never be 100% clean. There will always be gaps, tracking issues,

or legacy events you wish someone had named differently. Experienced PMs aim for “reliable enough to make a decision,”

not “perfect to the last decimal.”

When something looks off, investigate. When the difference is small and unlikely to change the decision, move forward.

The ultimate goal of analytics isn’t pristine dashboards; it’s better products and happier customers.

Conclusion

Analytics for product managers isn’t about memorizing formulas or showing off complex dashboards.

It’s about focusing on the right metrics, using proven frameworks like AARRR and North Star Metrics,

and building habits that turn numbers into action.

Track what matters across acquisition, activation, engagement, retention, satisfaction, and revenue.

Make your metric definitions clear, your goals explicit, and your experiments deliberate.

Combine quantitative data with real user stories, and treat analytics as a way to continuously learn about your product.

Do that consistently, and analytics stops being intimidating and starts being one of the most powerful tools

you have as a product manager.